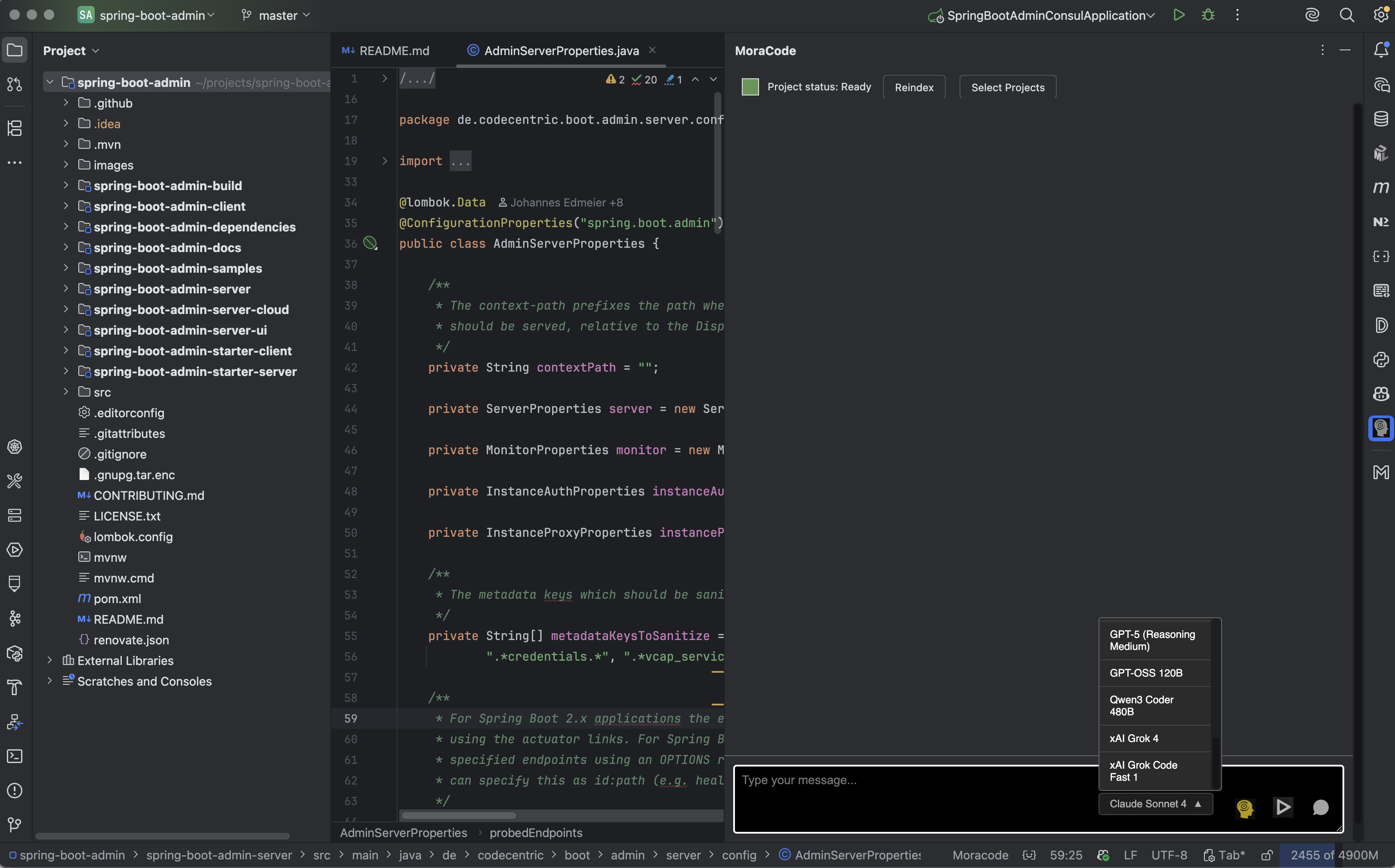

CLIENT-SIDE AI CODING AGENT WITH MULTI-REPO CONTEXT

We send only the minimal, relevant snippets to your LLM and never retain your code. AI indexing spans multiple repositories in a single conversation, which makes it well suited to microservice architectures where one change touches several services.

Code and context are sent to your chosen LLM using your own API key. Moracode does not store code, prompts, or outputs.

HOW IT WORKS

Connect repos

Select the services and libraries you want in the same workspace (e.g., Service-A, Service-B, Shared-Lib).

Index precisely

AI indexing targets only the files and symbols the current task needs, across all connected repos.

Plan with context

The agent proposes cross-service steps grounded in exactly that multi-repo context.

Execute safely

Review each proposed change before applying it, with full transparency and control.

DATA USAGE

What's sent

Only minimal, relevant snippets for the current task.

Where it goes

Directly to your LLM, using your own API key (BYOK: bring your own key).

What we retain

Nothing. There is no server-side retention.

BUILT FOR TEAMS THAT VALUE SECURITY, PERFORMANCE, AND TRANSPARENCY

We put privacy and security first in AI-assisted code analysis. Our platform lets developers use AI while keeping complete control over their code and data.

VALIDATE IT WHERE IT MATTERS: LARGE CODEBASES, TIGHT PRIVACY, REAL WORKLOADS

JETBRAINS PLUGIN

JOIN OUR COMMUNITY & SHARE YOUR EXPERIENCE

GET IN TOUCH DIRECTLY WITH OUR TEAM